I've been thinking about the old maxim "correlation is not causation." Skeptics tend to push a strong interpretation of the maxim. The skeptics say significance doesn't emanate from behavior; the phenomena of the world refuse to furnish their own meaning. Working scientists, on the other hand, tend to be more pragmatic. Scientists usually understand the maxim more as an admonition than a prohibition. A scientist might say that assigning causes to effects is okay; just be sure that the linkage is valid. Correlation might be causation, says the scientist, provided that the two measurements are indeed causally related.

For a long time prior to the Twentieth Century, the skeptic's prohibition was dominant. In the Eighteenth Century, David Hume famously argued that there's no way to definitively link a set of input events with a set of consequences. If two things tend to happen together we might say there is a "constant conjunction" between them, but beyond that there's no way to say they are linked through a cause. So while "correlation is not causation" has a specific, practical meaning (don't draw inappropriate conclusions from data), it also has a more general Humean meaning (data will only ever be constant conjunction, nothing more).

Later, with the turn to probability and statistics in the Twentieth Century -- consider, for example, the importance of probability in quantum mechanics -- the Humean consensus began to unravel. It's not that Hume's problem was solved. Quite the opposite: no human has yet invented a cause-effect detector. Rather, Hume's problem was rendered obsolete by practical circumstance. People simply stopped caring; they stopped worrying about whether truth was logical. In fact they stopped caring about truth.

Today's data scientists and AI researchers are, in a sense, radical Humeans, in that they still haven't produced any scientific proof (mechanical, logical, or otherwise) linking a cause to an effect. Nevertheless, such scientists act as if they were anti-Humeans. They assert positive conclusions based on correlation and correlation alone.

Overheard at the Googleplex: no, we don't believe in "cause" any more, at least not in the metaphysical sense; but if we did believe in it, correlation most certainly *is* causation. Indeed, Google's whole business model -- along with those of Amazon and scores of companies like them -- relies on the fact that correlation is causation, or at least that correlation is meaningful. Wendy Chun has traced this development back to a 1954 paper by Paul Lazarsfeld and Robert Merton in which they put forward the notion of "homophily," or the tendency to prefer those similar to oneself. Since then, "being similar" -- which is to say correlation -- has swelled in significance, and the notion that correlation might be mere arbitrary conjunction has nearly collapsed. If a data point is "near" another data point, such nearness is considered significant. Nearness is enough now. Similarity equals significance.

(As Chun and others have pointed out, network science has also frequently been used to model segregation in populations. So this isn't just an abstract discussion disconnected from real political consequences. The same is true for cellular automata simulations, for instance in Epstein and Axtell's book Growing Artificial Societies [1996].)

Technically speaking, things like "meaning" or "cause" are excised from the data paradigm; they are just nonsense words in that discourse. Under the data science paradigm, meaning has become a pragmatic question. "Meaning" means, roughly, "does it do the things we want?" If so, the outcome is retroactively termed "meaningful" or "caused" or "intelligent" or what have you.

All this raises the hackles of humanists, since humanists are supposed to care about things like meaning. And indeed humanists have raised many objections to both the foundations and the implementation of data science. (For the most part, scientists simply ignore these objections, which is frustrating. We read them but they rarely read us.) I would summarize the meaning problem in the following way. No one in the history of humanity (!) has yet figured out a way to mechanize meaning. If they had, we'd know about it already. Of course machines are very good at doing certain things. They can store and retrieve numbers. They can add and subtract. They can implement logical operations. But no one has yet patented a Meaning Machine. (The reasons for this are complex: the success of formalism in early-twentieth-century mathematics is one part of the story; Claude Shannon's information theory is another; the definition of the monad in Leibniz or Euclid is yet another.) So we have a problem with meaning and signification more generally, namely that machines aren't very good at it, at least not by themselves.

These objections aside, it struck me that someone like Quentin Meillassoux would have an even stronger rejoinder to the data science model. I don't know if he has written about it, but for Meillassoux correlation is certainly not causation, and in fact correlation isn't even correlation! His is a kind of hyper-Humean position in which, first, the data of nature are contingent -- no surprise there -- but also, second, the very laws of coherent nature are contingent as well (even prior to the thorny question of "interpretation").

Consider Meillassoux's so-called principle of unreason, that "there is no reason for anything to be or to remain the way it is" (After Finitude, 60). The reference here is to the principle of sufficient reason (PSR), one of the mainstays of philosophy going back to Leibniz and indeed further to the ancients. The PSR is typically given as a lurid narrative of origins: "a reason why." I take the essence of the PSR slightly differently, to mean the coincidence of being and thinking, or, if you like, the coincidence of being and relating. More than "a reason why," the essence of the PSR entails an even more basic claim: that being and thinking ought to coincide at all. (I want to reduce the PSR in this way because I consider cause, reason, and relation all to be of the same basic type, the type called "thinking.") Meillassoux's principle of unreason directly attacks the PSR by claiming that there need not be a reason why, nor a cause at all. In fact it's even more extreme than that: not only no reason why, but also the impossibility of ever having a coherent reason, of ever finding necessity within being. Thus if the classical position entails "a reason why," Meillassoux's position is "for no reason."

(Meillassoux is a virtuoso on the page, most certainly, although his project is just garden-variety nihilism taken to the nth degree. One's affection for Meillassoux will thus hinge on one's interest in nihilism. Personally I'm a fan, except that nihilism doesn't work all by itself. Nihilism must always be supplemented by a complementary expression of re-enchantment and intensity, be it romanticism, poetic creativity, the force of will, or something else.)

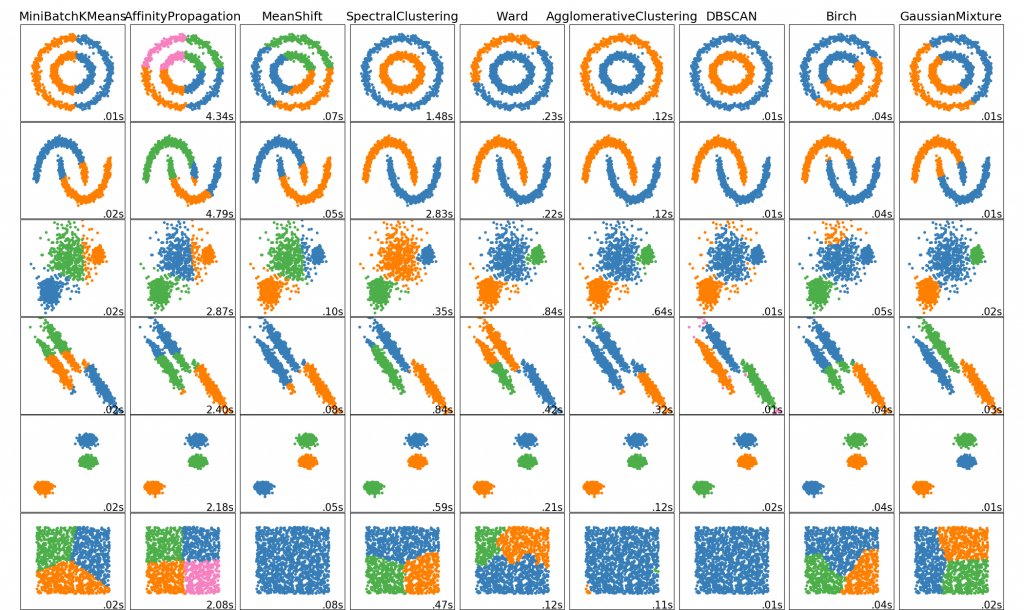

I take two things from this. First, while Meillassoux most certainly endorses a strong mathematical model (see After Finitude, 3, 11, 26, and passim), the version of mathematical absolutism he proposes undercuts the ability of data science to make definitive claims. If a clustering algorithm can't show causation, and can't even show correlation -- no matter how neatly it clusters a set of data points -- then what is it good for? (Two cheers for Meillassoux.)

Second, something I've said before but worth saying again: the digital does not exist without the principle of sufficient reason. The coincidence of being and relation -- whether proven or taken on faith -- is a natural precondition for the digital. This is evident in the foundations of arithmetic and in many other places. This means that if you're seeking the digital, you should look for evidence of the PSR, that is, look for a substrate in which relation coheres. Conversely, if you're seeking to eliminate the digital, look for some condition in which relation does not cohere. There are many examples of the latter, the best known being analog anti-philosophy, the non-standard method, and dialectics.

At the same time, Elie Ayache's The Blank Swan might be worth considering, since he specifically discusses new technologies (via fintech) and since he has taken Meillassoux in new directions. I'm not yet sure...