tl;dr: Carnivore is up and running -- so happy coding!

I've been getting a lot of email recently about Carnivore and Kriegspiel, the two software projects that I've spent the most time on over the years. As anyone who's released software knows, it's unclear how long code should be maintained. A few years? Five? Ten? I've gone back and forth about whether to maintain these projects. The Kriegspiel beta went offline a few years ago and is due for a major overhaul. Carnivore was first launched over fifteen years ago -- that's some old code! -- and has changed dramatically as networks themselves have changed. So here's the latest update on Carnivore. I'll post a separate update for Kriegspiel.

Carnivore effectively went dark a few years ago as the industry gradually migrated to 64-bit computers. The reason is that Carnivore relies on a native library that bridges the Java code and the network adapters. Unfortunately that library hasn't been maintained since 2004, and it no longer compiled. The good news is that a year ago I started messing around with the native library's source code, and after some headaches (my experience with debugging and compiling this stuff is essentially zero) I got it to compile! So Carnivore should be up and running on modern (i.e., 64-bit) machines. Simply download Processing and load Carnivore as a library.
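For the curious, here is roughly what "going dark" looks like from the Java side. A native library has to match the architecture of the JVM that loads it, and when it can't be found or doesn't match, `System.loadLibrary` throws an `UnsatisfiedLinkError`. The sketch below is not part of Carnivore: it just reports the JVM's architecture and tries to load a native library. The library name `examplepcap` is made up for illustration, and the 64-bit check is only a rough heuristic on the `os.arch` string.

```java
public class ArchCheck {
    /** Rough heuristic: most 64-bit os.arch values contain "64" (amd64, x86_64, aarch64, ppc64le). */
    static boolean is64Bit(String osArch) {
        return osArch.contains("64");
    }

    public static void main(String[] args) {
        String arch = System.getProperty("os.arch");
        System.out.println("JVM architecture: " + arch + (is64Bit(arch) ? " (64-bit)" : " (probably 32-bit)"));
        try {
            // Hypothetical library name; a real bridge would be a libpcap-style capture library.
            System.loadLibrary("examplepcap");
            System.out.println("native library loaded");
        } catch (UnsatisfiedLinkError e) {
            // Thrown when the library is missing or was built for a different architecture.
            System.out.println("could not load native library: " + e.getMessage());
        }
    }
}
```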

Still, networks have changed dramatically in the last fifteen years, and this affects how Carnivore works and what it can do. Two things worth noting: (1) the death of promiscuous mode, and (2) the hegemony of HTTP.

Death of Promiscuous Mode

"Promiscuous mode" is a setting on a computer's network adapter. When promiscuous mode is inactive (the normal setting), the computer hears only data explicitly addressed to it, plus broadcast traffic meant for every machine on the network. With promiscuous mode active, the computer hears all the data that reaches its network adapter, including traffic addressed to other machines. Promiscuous mode can be very useful, for instance when debugging a problem with network traffic. But it can also be a security liability, since eavesdroppers can listen to data intended for others.
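To make the distinction concrete, here is a toy model of the decision a network adapter makes for each incoming frame. It is a simplification (real adapters also filter multicast, and MAC addresses are bytes rather than strings), and the class and method names are invented for this example.

```java
import java.util.List;

public class PromiscuousFilter {
    static final String BROADCAST = "ff:ff:ff:ff:ff:ff";

    /** Would an adapter with MAC ownMac pass a frame addressed to destMac up to the OS? */
    static boolean accepts(String ownMac, String destMac, boolean promiscuous) {
        if (promiscuous) {
            return true; // promiscuous mode: keep every frame that reaches the adapter
        }
        // Normal mode: keep only frames addressed to us, plus link-layer broadcasts.
        return destMac.equalsIgnoreCase(ownMac) || destMac.equalsIgnoreCase(BROADCAST);
    }

    public static void main(String[] args) {
        String me = "aa:aa:aa:aa:aa:01";
        List<String> destinations = List.of(me, "bb:bb:bb:bb:bb:02", BROADCAST);
        for (String dest : destinations) {
            System.out.printf("%s  normal=%b  promiscuous=%b%n",
                    dest, accepts(me, dest, false), accepts(me, dest, true));
        }
    }
}
```

The only frame whose fate depends on the mode is the one addressed to someone else; that is exactly the traffic Carnivore used to hear.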

It used to be that Ethernet/Wi-Fi routers were designed in a way that made promiscuous mode useful. They broadcast their packets, sort of like how a radio tower broadcasts a signal for anyone within earshot to hear. This meant that you could listen to all the data traffic on the local area network. However, due to increased security concerns and general changes in the industry, routers have gradually migrated to a "switched" rather than broadcast model, meaning that data is sent only to the intended receiver.

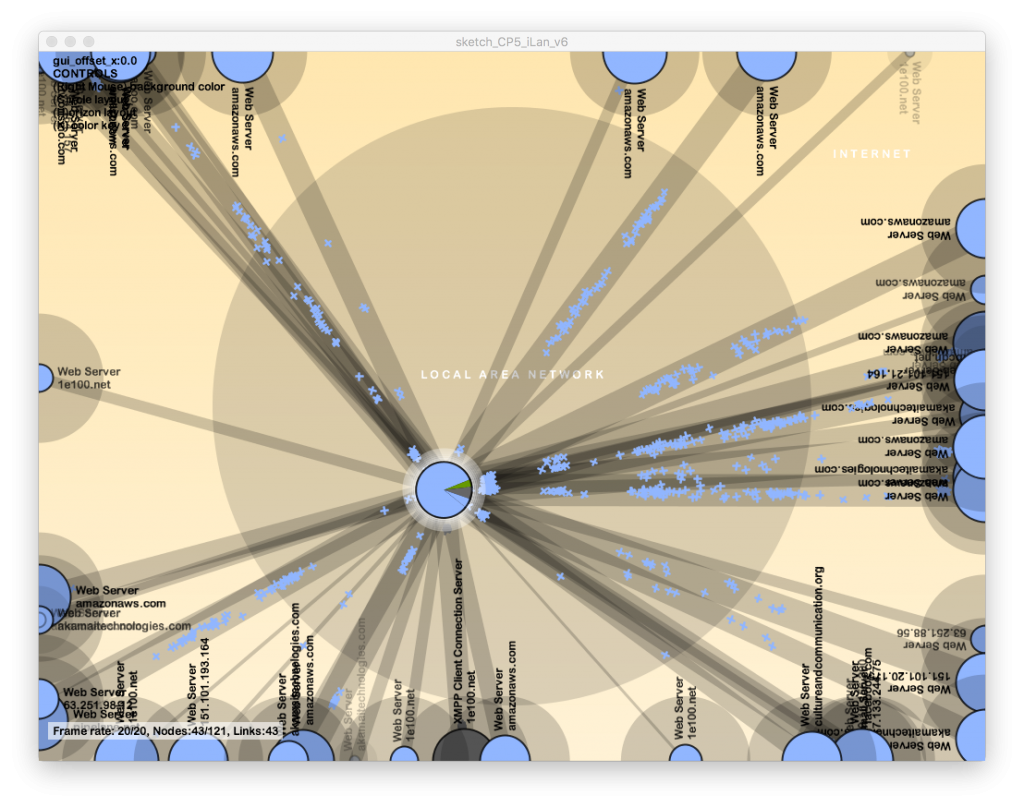

In other words, if you run Carnivore today, chances are you'll hear only your own traffic. (Folks on older routers won't have this problem.) Carnivore is still "working," but it doesn't get to hear as much as it used to because the infrastructure itself has changed. You can eavesdrop, but only on yourself. A fitting metaphor for our times?
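Here is a rough sketch of the broadcast-versus-switched difference, assuming a simplified Ethernet where each device plugs into one numbered port. A hub repeats every frame out of every other port. A learning switch records which port each sender's address shows up on and, once it knows where a destination lives, sends frames only there; it still floods frames for destinations it hasn't seen yet. The names `HubVsSwitch` and `LearningSwitch` are hypothetical.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

public class HubVsSwitch {
    /** A hub repeats every frame out of every port except the one it arrived on. */
    static List<Integer> hubOutPorts(int inPort, int portCount) {
        List<Integer> out = new ArrayList<>();
        for (int p = 0; p < portCount; p++) {
            if (p != inPort) out.add(p);
        }
        return out;
    }

    /** A learning switch remembers which port each source address was seen on. */
    static class LearningSwitch {
        private final int portCount;
        private final Map<String, Integer> macTable = new HashMap<>();

        LearningSwitch(int portCount) { this.portCount = portCount; }

        List<Integer> forward(int inPort, String srcMac, String dstMac) {
            macTable.put(srcMac, inPort);           // learn where the sender lives
            Integer known = macTable.get(dstMac);
            if (known == null) {
                return hubOutPorts(inPort, portCount); // unknown destination: flood like a hub
            }
            if (known == inPort) return List.of();  // destination is on the arrival port: drop
            return List.of(known);                   // known destination: that port only
        }
    }

    public static void main(String[] args) {
        LearningSwitch sw = new LearningSwitch(4);
        // Host A on port 0 and host B on port 1 talk; an eavesdropper sits on port 3.
        System.out.println(sw.forward(0, "A", "B")); // B not learned yet: flooded
        System.out.println(sw.forward(1, "B", "A")); // A learned on port 0
        System.out.println(sw.forward(0, "A", "B")); // B learned on port 1
        System.out.println(hubOutPorts(0, 4));       // a hub always floods
    }
}
```

The eavesdropper on port 3 catches the first A-to-B frame, because the switch hasn't learned B's location yet, and none of the frames after that. Behind a hub, it would have heard them all.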

HTTP Hegemony

Narrowcast instead of broadcast: that's one shift I can identify. Another is a narrowing in the use of ports. It used to be that you turned on Carnivore and saw a wide spectrum of ports. The printer would be talking over here. The router would be chatting over there. Email would be doing one thing, FTP and Telnet another. There was a rich protocological landscape.

Over the last ten to fifteen years, the industry has migrated from protocols to platforms. (The consequences are dramatic, though I won't go into them here; for instance, protocols are open and non-proprietary, while platforms tend to be commercial.) The best example of this is probably the shift from personal computers to cloud services. Or, if you like, the shift from Microsoft to Google: both make operating system software, but Google's software lives primarily in the cloud, while Microsoft's lives primarily on your device. (At a certain point Google realized that the browser was its operating system; even Google's own devices are essentially browser-oriented.)

The cloud, web services, network APIs: these constitute the new digital landscape, replacing what used to run locally on a personal computer. For example, it used to be much more common for email to be retrieved over POP (the Post Office Protocol). That meant email had its own port (110, for POP3), its own protocol, and typically its own client application (like Mail or Eudora). These days POP is less common, as users have migrated to cloud-based services like Gmail or, interestingly, abandoned email altogether for other kinds of platform-based communication (social media and chat platforms).

So if you run Carnivore today, you will likely see a ton of Web traffic -- port 80 is customary for Web/HTTP -- and relatively less traffic on other ports. The reason is that the cloud is essentially one enormous Web service.
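To check this on your own machine, here is a minimal sketch of the kind of tally a Carnivore client could keep. It assumes you've already pulled the server-side port out of each packet (for outgoing traffic that's usually the receiver's port). The port-to-service table covers only a handful of well-known ports, it counts port 443 (encrypted HTTPS) as Web traffic alongside port 80, and the sample data is made up.

```java
import java.util.Map;
import java.util.TreeMap;

public class PortTally {
    /** Map a few well-known server ports to service labels. */
    static String service(int port) {
        switch (port) {
            case 21:  return "FTP";
            case 23:  return "Telnet";
            case 25:  return "SMTP";
            case 53:  return "DNS";
            case 80:  return "HTTP";
            case 110: return "POP3";
            case 143: return "IMAP";
            case 443: return "HTTPS";
            case 631: return "IPP (printing)";
            default:  return "other";
        }
    }

    /** Count packets per service label (sorted by label). */
    static Map<String, Integer> tally(int[] ports) {
        Map<String, Integer> counts = new TreeMap<>();
        for (int port : ports) {
            counts.merge(service(port), 1, Integer::sum);
        }
        return counts;
    }

    /** Fraction of packets that are Web traffic (HTTP on 80 or HTTPS on 443). */
    static double webShare(int[] ports) {
        if (ports.length == 0) return 0.0;
        int web = 0;
        for (int port : ports) {
            if (port == 80 || port == 443) web++;
        }
        return (double) web / ports.length;
    }

    public static void main(String[] args) {
        int[] sample = {443, 443, 80, 443, 110, 443, 80, 53}; // made-up sample
        System.out.println(tally(sample));
        System.out.printf("web share: %.2f%n", webShare(sample));
    }
}
```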

There's a Carnivore Client I use for testing that lights up in different colors for different ports. It used to be multicolored. But these days when I run it, it's monochromatic: all blue for one port, HTTP.
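The testing client isn't shown in this post, so the following is only a guess at the idea: give each port its own hue, then count how many distinct hues a stream of packets lights up. The golden-ratio step is one common way to keep consecutive port numbers from getting similar hues; it is an assumption here, not how that client works. The names `hueFor` and `distinctHues` are hypothetical.

```java
import java.util.Arrays;

public class PortHues {
    static final double GOLDEN_RATIO_CONJUGATE = 0.618033988749895;

    /** Hue in degrees [0, 360) for a port; consecutive ports land far apart on the color wheel. */
    static int hueFor(int port) {
        double fraction = (port * GOLDEN_RATIO_CONJUGATE) % 1.0;
        return (int) (fraction * 360);
    }

    /** Number of distinct hues a stream of packet ports would light up. */
    static long distinctHues(int[] ports) {
        return Arrays.stream(ports).map(PortHues::hueFor).distinct().count();
    }

    public static void main(String[] args) {
        int[] then = {21, 23, 25, 80, 110}; // FTP, Telnet, SMTP, HTTP, POP3
        int[] now = {80, 80, 80, 80, 80};   // everything over HTTP
        System.out.println("then: " + distinctHues(then) + " hues");
        System.out.println("now:  " + distinctHues(now) + " hue(s)");
    }
}
```

In a Processing sketch you might pass the result to `fill()` after calling `colorMode(HSB, 360, 100, 100)`.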

To summarize: narrowcast instead of broadcast (which killed promiscuous mode); and funneling services through the Web (which killed, shall we call it, "protocological biodiversity").
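One way to put a number on "protocological biodiversity" is to borrow a diversity index from ecology: the Shannon entropy of the port distribution, `H = -sum(p_i * log2(p_i))`, where `p_i` is the fraction of packets on port `i`. An all-one-port stream scores 0 bits; traffic spread evenly over `n` ports scores `log2(n)` bits. This is a sketch of that idea, not something Carnivore computes.

```java
import java.util.HashMap;
import java.util.Map;

public class PortDiversity {
    /** Shannon entropy, in bits, of the distribution of ports in a packet sample. */
    static double entropyBits(int[] ports) {
        Map<Integer, Integer> counts = new HashMap<>();
        for (int port : ports) counts.merge(port, 1, Integer::sum);
        double h = 0.0;
        for (int c : counts.values()) {
            double p = (double) c / ports.length;
            h -= p * (Math.log(p) / Math.log(2)); // log base 2
        }
        return h; // an empty sample yields 0.0
    }

    public static void main(String[] args) {
        int[] then = {21, 23, 25, 80};   // four ports, evenly used
        int[] now = {80, 80, 80, 80};    // monochromatic
        System.out.printf("then: %.2f bits%n", entropyBits(then));
        System.out.printf("now:  %.2f bits%n", entropyBits(now));
    }
}
```

With four equally used ports each gets a quarter of the packets, so `H` is 2 bits; the all-HTTP stream drops to zero.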

What does this mean for people making Carnivore Clients in Processing? I'm not sure. One option would be to use Carnivore for a quantified self project. What's my data diet? How does the infrastructure respond when I surf in the browser? Ultimately it's up to you to be creative within these limitations.